Introduction

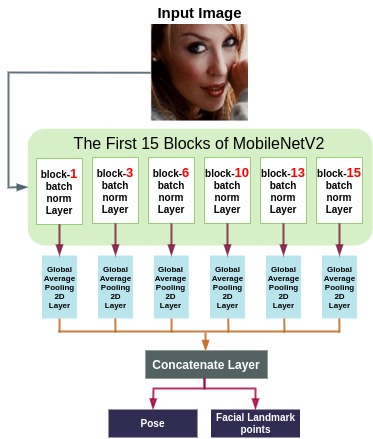

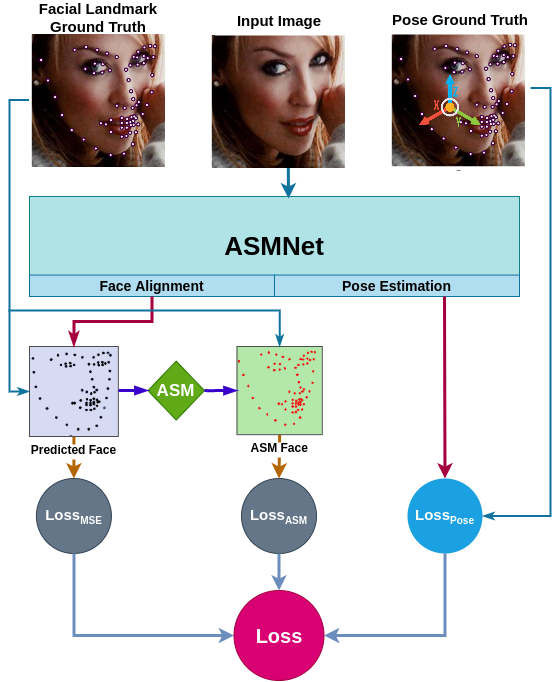

ASMNet is a lightweight Convolutional Neural Network (CNN) designed for efficient face alignment and pose estimation with acceptable accuracy. ASMNet is inspired by MobileNetV2, modified to suit face alignment and pose estimation while having about half as many parameters. Moreover, inspired by the Active Shape Model (ASM), an ASM-assisted loss function is proposed to improve the accuracy of facial landmark detection and pose estimation.

ASMNet Architecture

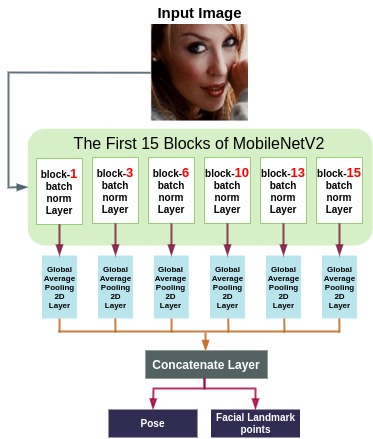

Features in a CNN are distributed hierarchically. The lower layers hold features such as edges and corners, which are better suited to tasks like landmark localization and pose estimation, while the deeper layers hold more abstract features that are better suited to tasks like image classification and image detection. Furthermore, training a network on correlated tasks simultaneously builds a synergy that can improve the performance of each task.

With this in mind, we designed ASMNet to fuse the features that are available in different layers of the model. Concatenating the features collected after each global average pooling layer also lets the network, during back-propagation, evaluate the effect of each shortcut path. The following is the ASMNet architecture:

The implementation of ASMNet in TensorFlow is provided in the following path:

ASMNet TensorFlow Implementation
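Independently of that implementation, the fusion idea described above can be illustrated with a minimal NumPy sketch. It is not the ASMNet code: the feature-map shapes, the random linear heads, the choice of 68 landmarks (as in 300W), and the three pose outputs are all assumptions made for illustration. It only shows how feature maps from different depths can each be reduced by global average pooling and then concatenated into one vector that feeds both tasks.

```python
import numpy as np


def global_average_pool(feature_map):
    """Average a (height, width, channels) feature map over its spatial axes."""
    return feature_map.mean(axis=(0, 1))


def fuse_features(feature_maps):
    """Pool each feature map and concatenate the pooled vectors."""
    return np.concatenate([global_average_pool(f) for f in feature_maps])


rng = np.random.default_rng(0)

# Hypothetical feature maps taken from three depths of a backbone.
# The shapes are illustrative and not taken from ASMNet.
shallow = rng.normal(size=(56, 56, 24))
middle = rng.normal(size=(28, 28, 32))
deep = rng.normal(size=(7, 7, 1280))

fused = fuse_features([shallow, middle, deep])  # length 24 + 32 + 1280

# Two hypothetical linear heads share the fused vector, one per task.
num_landmarks = 68  # assumption: 68 (x, y) points, as annotated in 300W
w_landmarks = rng.normal(size=(fused.size, 2 * num_landmarks)) * 0.01
w_pose = rng.normal(size=(fused.size, 3)) * 0.01  # assumption: 3 pose angles

landmarks = fused @ w_landmarks
pose = fused @ w_pose
print(fused.shape, landmarks.shape, pose.shape)
```

Because every pooled vector reaches the output heads directly, the gradient of each task flows back into every depth along its own shortcut, which is the property the paragraph above attributes to the concatenation.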

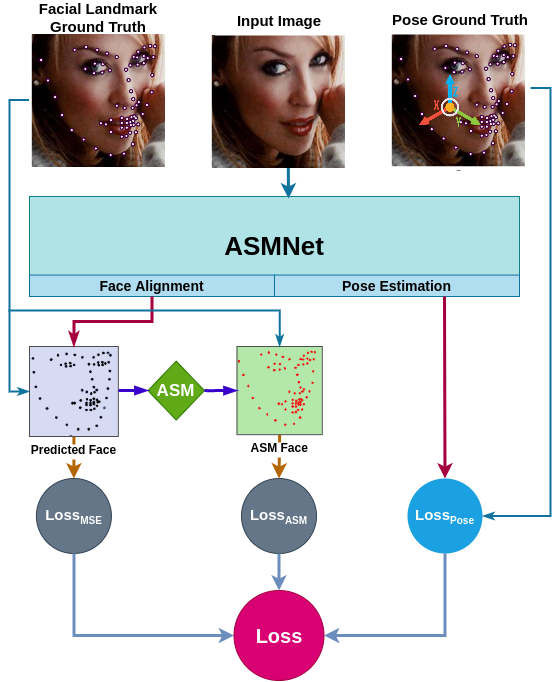

ASM Loss

We propose a new loss function called ASM-LOSS, which uses ASM to improve the accuracy of the network. During training, the loss function compares the predicted facial landmark points with their corresponding ground truth as well as with a smoothed version of the ground truth, which is generated using the ASM operator. Accordingly, ASM-LOSS first guides the network to learn the smoothed distribution of the facial landmark points, and then leads it to learn the original landmark points. For more detail, please refer to the paper.
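As a rough illustration of the idea, the sketch below builds a smoothed ground truth by projecting a shape onto the leading principal components of a set of training shapes, then combines two mean-squared-error terms. This is a sketch under stated assumptions, not the formulation from the paper: the function names, the plain PCA projection used as the ASM operator, the synthetic data, and the single `asm_weight` factor are all hypothetical.

```python
import numpy as np


def fit_shape_model(shapes, num_components):
    """Fit a PCA shape model; return the mean shape and the top components."""
    mean_shape = shapes.mean(axis=0)
    centered = shapes - mean_shape
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_shape, vt[:num_components]


def asm_smooth(shape, mean_shape, components):
    """Project a shape onto the model subspace and reconstruct it."""
    coefficients = components @ (shape - mean_shape)
    return mean_shape + components.T @ coefficients


def asm_assisted_loss(predicted, ground_truth, smoothed, asm_weight):
    """Sum of the MSE to the ground truth and a weighted MSE to its smoothed version."""
    mse_ground_truth = np.mean((predicted - ground_truth) ** 2)
    mse_smoothed = np.mean((predicted - smoothed) ** 2)
    return mse_ground_truth + asm_weight * mse_smoothed


rng = np.random.default_rng(0)
# Synthetic stand-in for training shapes: 200 faces, 68 (x, y) points each.
training_shapes = rng.normal(size=(200, 136))
mean_shape, components = fit_shape_model(training_shapes, num_components=20)

ground_truth = training_shapes[0]
smoothed = asm_smooth(ground_truth, mean_shape, components)
predicted = ground_truth + rng.normal(scale=0.1, size=136)

loss = asm_assisted_loss(predicted, ground_truth, smoothed, asm_weight=0.5)
print(loss)
```

One way to obtain the "smoothed first, original later" behaviour described above would be to start training with a large `asm_weight` and reduce it over time; that schedule is an assumption here, and the paper should be consulted for the actual scheme.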

Evaluation

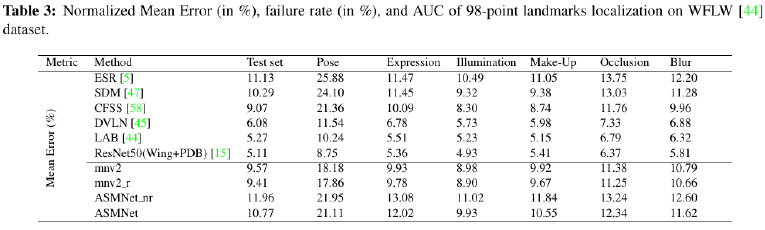

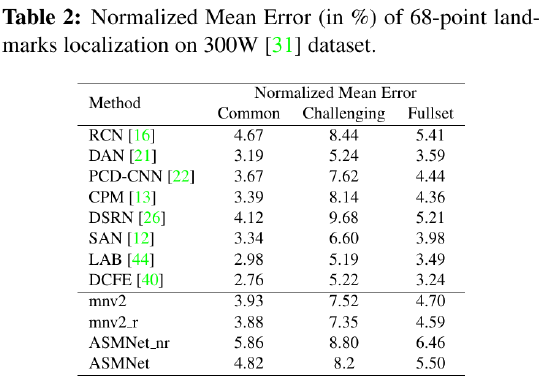

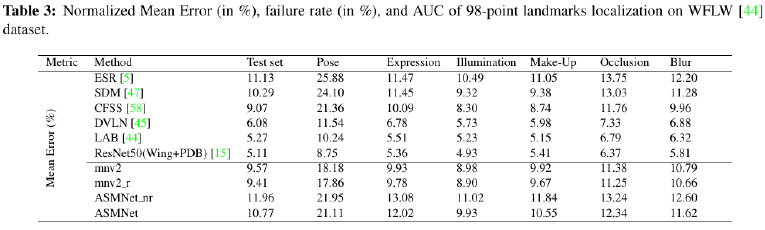

As you can see in the following tables, ASMNet has only 1.4 M parameters, the fewest among comparable facial landmark detection models. Moreover, ASMNet performs both face alignment and pose estimation with a very small CNN while retaining acceptable accuracy.

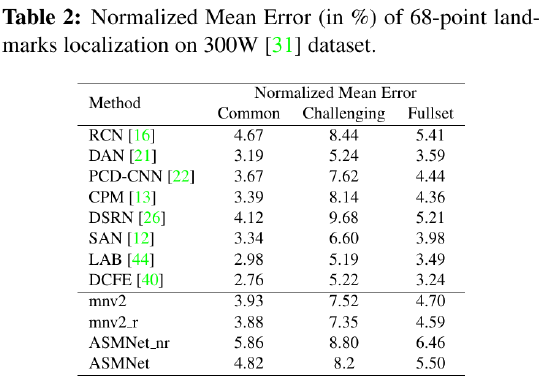

Although ASMNet is much smaller than the state-of-the-art face alignment methods, its accuracy remains acceptable for many real-world applications:

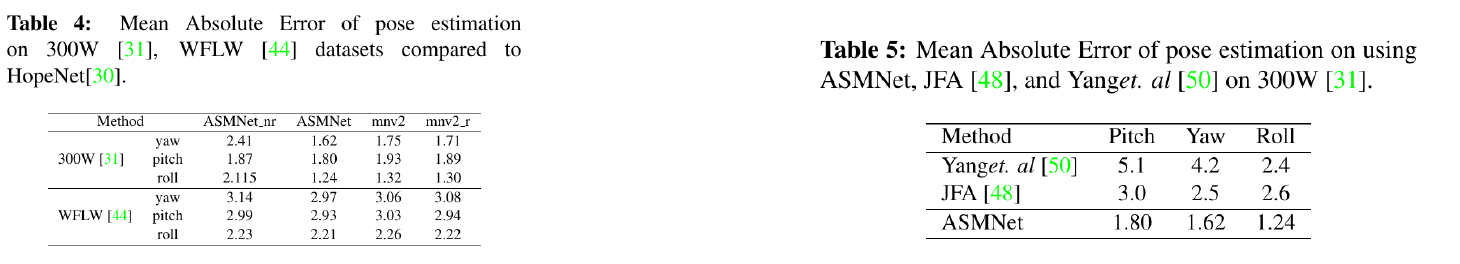

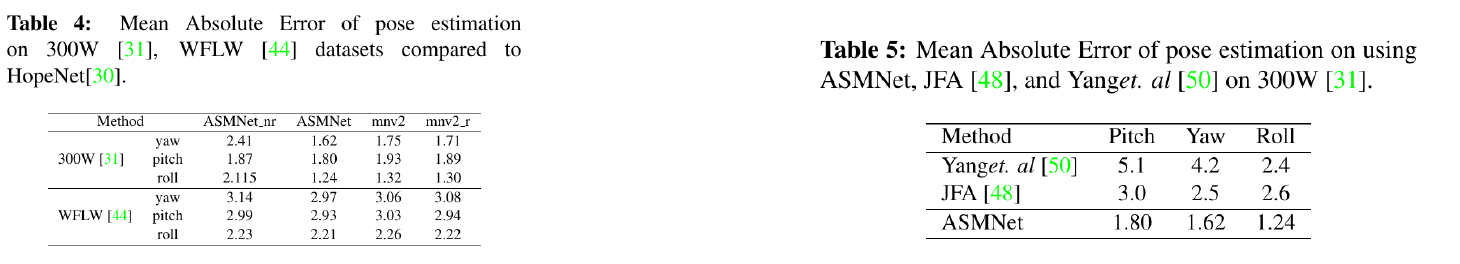

As shown in the following table, ASMNet performs much better than the state-of-the-art models on the pose estimation task on the 300W dataset:

Visual Performance

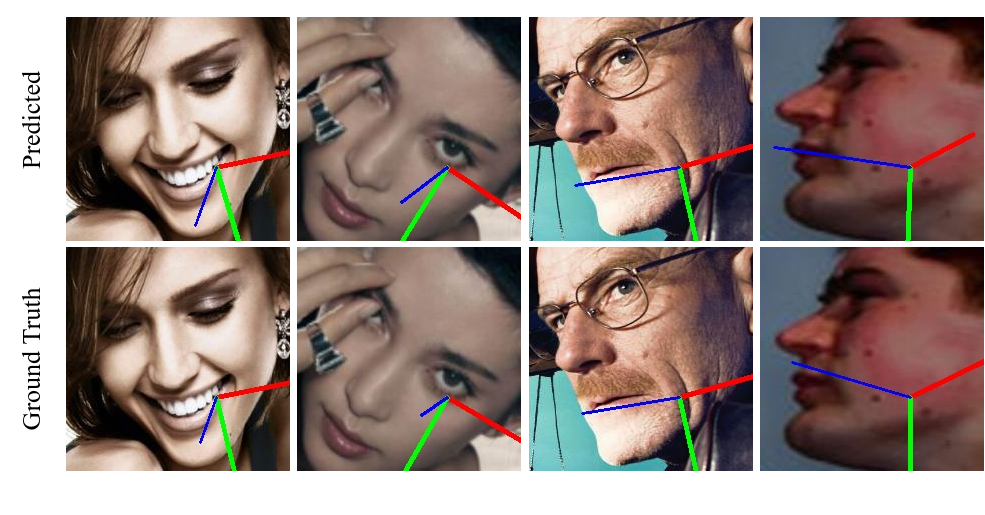

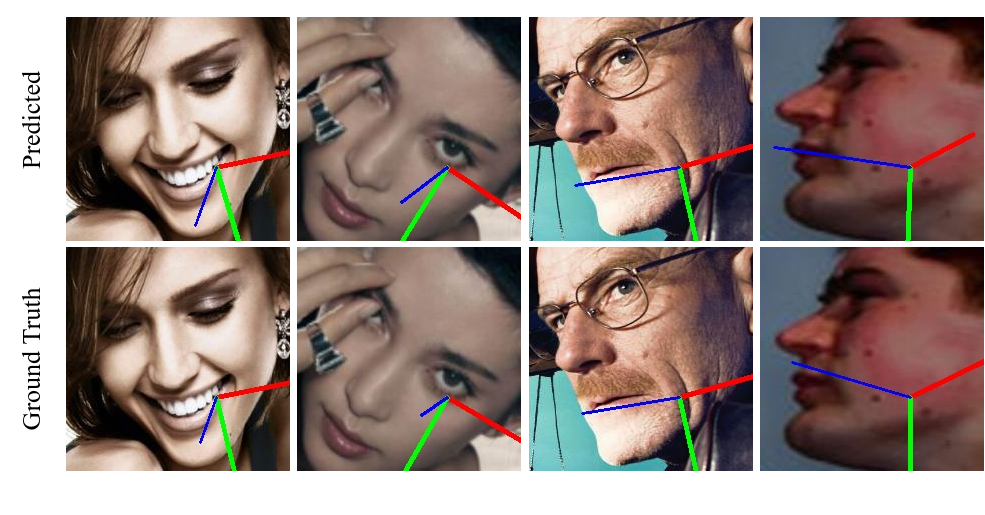

The following samples show the visual performance of ASMNet on the 300W and WFLW datasets:

On the pose estimation task, ASMNet produces visually accurate results, which are also much better than those of state-of-the-art pose estimation methods on the 300W dataset: